This interview initially appeared in The Avalanche Journal, Vol. 137, Winter 2025

By Alex Cooper

In 2003, Grant Statham was hired by Parks Canada to implement the recommendations of the Backcountry Avalanche Risk Review that followed the 2003 Connaught Creek avalanche. His work led to the introduction of the Avalanche Terrain Exposure Scale, improvements to the public avalanche danger scale, and the development of the Conceptual Model of Avalanche Hazard.

I spoke to him about the evolution of the Conceptual Model into a tool that is an essential part of the forecasting workflow for avalanche professionals in North America and beyond. What follows is a transcript that was edited for clarity and length. Watch the video below for the full interview.

Alex Cooper: Before getting into the Conceptual Model, I was wondering if you can just talk a little bit about what the forecasting process was like before it.

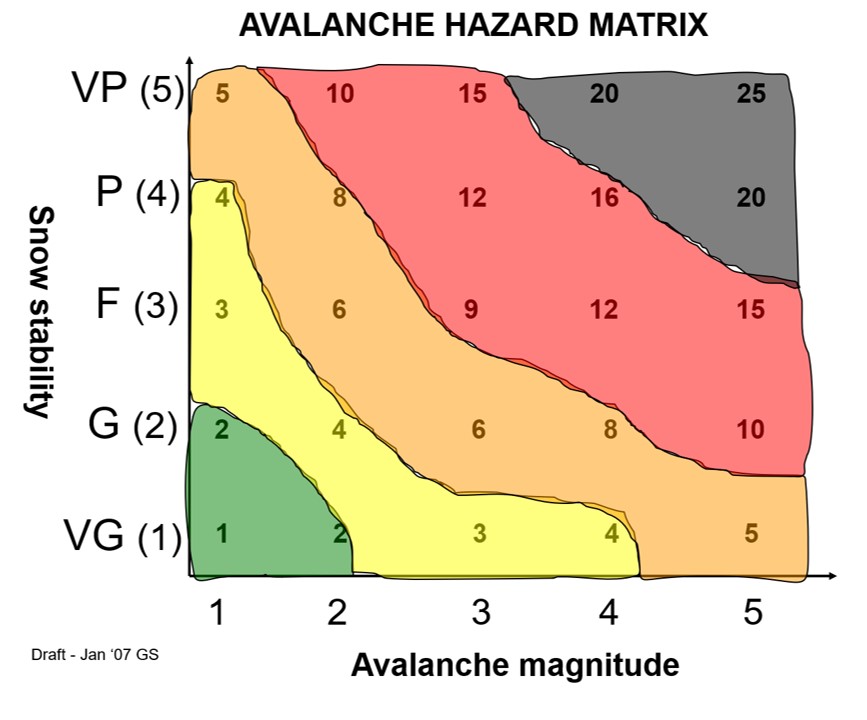

Grant Statham: That seems like a long time ago now. For the longest time, we did it a specific way. We generally were formed up around the snow stability system and we used a snow stability rating checklist for our assessments. This is what the CAA was teaching and all of InfoEx did it this way, too. In the back of OGRS, you’ll see the snow stability rating system.

We did a lot of the same stuff we do today. We would look at avalanche observations, weather observations, snowpack, but we didn’t have a specific workflow. Today, we follow a particular workflow, and it follows a lot of the processes from the Conceptual Model, but we didn’t use to have a formalized workflow, especially for public forecasting. InfoEx was pretty structured, but the public forecasting process wasn’t.

There was a process. You’d go through the weather, the avalanches, write the snowpack summary, and then you would land on a snow stability rating and apply that across your region. But there were some problems with the snow stability system because it was being misapplied.

Snow stability is defined today as a point-scale observation. It’s something you can use within a 2 m² area and it doesn’t include any sense of consequence. It has no avalanche size. It’s just what the stability is assessed to be at a single point, but we were applying it across whole regions, so there were some gaps. It was very common to read in InfoEx: “Poor stability but low hazard,” which meant the snow was unstable but there was no consequence to it. The public forecasts used the danger scale, which contained likelihood language but no sense of consequence and no process to determine what the danger rating was other than the same scale the public saw. We wanted to separate assessment and public communication.

Our objective was to try to pull all this together into a new system that was broader than stability. We wanted risk-based systems that included consequence. That was important. We wanted to get avalanche size involved in the assessment, and then bring everybody together to use a common framework.

How did that develop?

It really started in 2003. In the Avalanche Risk Review, which I was not involved in preparing, the Number 1 recommendation out of 36 was to work with the CAA to revise the danger scale. I got hired to implement that report, but it was pretty clear to me this was a complicated project and it needed to be done across borders. You don’t do that unilaterally.

I had two years to implement that report, so what we did is we developed a new system called the Backcountry Avalanche Advisory, and it was focused on communication. We had three levels of conditions, and we built those icons you see on the danger scale today.

Once that was done and the initial pressure was off to implement that report, I shifted to the danger scale. In talking with colleagues in the U.S., they wanted to do the same thing. Everybody recognized we needed to revise this thing. The danger scale had no avalanche size, so it didn’t have any consequence. We wanted to separate the assessment and communication of avalanche danger. I started working on that in probably 2006.

How did the Conceptual Model flow out of that work on the danger scale?

It was never really a plan to do that. The new Canadian Avalanche Centre (CAC) formed an ad hoc committee. I was leading this committee and we pulled in some guys from the U.S. and started working. From my point of view, the first thing we needed to figure out if we’re going to redo the danger scale was, what actually is avalanche danger?

Everybody had different definitions of what danger, hazard, and risk meant. I realized right away I didn’t know the proper definitions of risk and hazard, which is kind of crazy when you think I’d been forecasting for 15 years and a CAA instructor for the previous decade. Our group was initially all over the place on this, so we started there. Let’s get on the same page with definitions and a risk-based framework.

Then I asked, “How do you determine avalanche danger? What’s your process?” Again, everybody had different processes and there was no consistent method someone could describe. It was funny, because we would usually all land in the same place, but in terms of how to get there, there wasn’t a single way we would follow.

Our objective became to first define the risk-based terminology and structure, then to build a workflow for avalanche hazard assessment, then revise the danger scale with a focus on public communication. It turned into a three-stage project (Fig. 1).

How did you develop the workflow for the Conceptual Model?

There were a few things going on at that time. First, in 2004, Roger Atkins published a paper called An Avalanche Characterization Checklist for Backcountry Travel Decisions (ISSW 2004). It seems normal now, but at the time it was unique and a very interesting way to talk about backcountry avalanche hazard in terms of different kinds of avalanches that would result from different conditions and lead us to the different terrain choices. It really resonated—I recommend anybody read Roger’s paper. Our framework initially used a concept called “Avalanche Character,” which later became the “Avalanche Problem Type.”

At the same time, I got pulled deep into a book by Steven Vick called Degrees of Belief: Subjective Probability and Engineering Judgement. That book changed my life as it exposed me to the world of risk and, in particular, subjective risk assessment. I learned about the large body

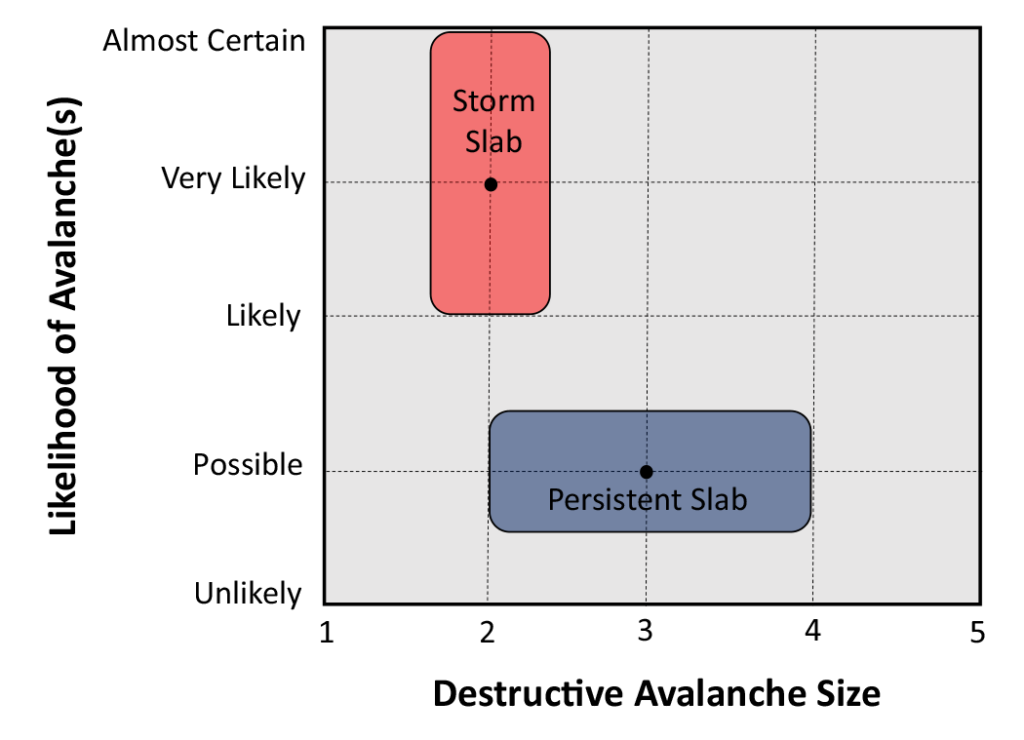

of science behind subjectivity. It described the process of decomposition, so taking a complicated problem and decomposing it into its basic components. We used that for avalanche danger. We started from the top with avalanche danger, and then we reverse-engineered it and broke it down into pieces, defined each piece, and then reassembled them into a risk-based framework that became the CMAH (Fig. 2).

We did that initially as this ad hoc committee. We started in 2005, but by 2007 it was pretty clear this was more complicated and we needed some funding. The CAC was applying for a SAR NIF (Search & Rescue New Initiatives Fund) project called Avalanche Decision-Making Framework for Amateur Recreation. One of the pieces was to revise the danger scale; we got funding for several years and that allowed us to take it forward.

This project emerged from developing the (public) danger scale, but the Conceptual Model turned into something that could be used by all sectors of the industry. How did that come about?

You’re right. We were focused on public danger originally, but as we worked on avalanche hazard, the results seemed to apply to our forecasting more broadly than just the danger scale. As it gained some traction, we did some testing using a website Pascal Haegeli made, and we tested it with different groups of people in the industry. The workflow—the avalanche character, the components of hazard assessment—really resonated. The CAA saw it as an excellent tool for teaching and it became incorporated in the CAA training programs early on. This CMAH framework and risk theory in general became embedded in the development of the Level 3 course. InfoEx was being rebuilt at that time as well. InfoEx went from being a report that was mostly data columns and paragraphs into a more structured method, and that structure followed the CMAH. Software development for avalanche forecasting was new then, and if you’re a software developer and I handed you this model, you could program this into a computer. It caught on pretty fast.

We talked a bit about avalanche problems, but then size and likelihood are the two axes on the scale for hazard. It seems obvious now, but was it obvious at the time those are the two main factors for determining hazard?

No, it wasn’t obvious. That goes back to the beginning, when we weren’t all on the same page about what avalanche hazard was and what avalanche risk was. We didn’t have clean definitions of either, and we weren’t working off what I would today call a risk-based system. If you look at that matrix with likelihood on one axis and size on the other (Fig. 3), that’s basically probability and consequence. That’s a risk-based chart. The whole thing that underpins everything we did was the shift to risk-based thinking and how we first assess avalanche hazard, then incorporate exposure and vulnerability. Hazard assessment lands on avalanche hazard, but the actual decisions we make are based on risk and that must include the exposure and vulnerability of whatever element is at risk.

Avalanche hazard is Mother Nature. Then expose different things to that hazard: stick a highway in there, go heli-skiing, open a ski area—that’s exposure, and now something is vulnerable. It seems obvious and simple to me now, I guess, but at the time it didn’t.

I remember I drove my colleagues crazy during the first two years of this project because people thought I was wasting time working on those definitions. If you look at the various papers we published—we published three, one for each component—I’m the only author of the first one on definitions (ISSW 2008) and that’s because I was driving everybody crazy with my focus on definitions. I think they were going to jump ship, so I had to get that done fast so we could move on to the stuff everybody else wanted to work on. But it turns out, that was important.

How did you pull people around into accepting those definitions?

Slowly but surely, with a ton of meetings and presentations and a lot of listening. You and I are talking about something that took a decade to get through. This is the common way risk is defined and managed in other industries, but at that point, avalanche forecasting wasn’t risk-based. What was risk-based was the engineering side of avalanche work. The consultants and the engineers, they used risk-based systems.

Alan Jones helped me a lot because he is an engineer and was familiar with these concepts and used them in his day-to-day engineering work. I was trying to take those same concepts and plug them into backcountry avalanche risk and public avalanche forecasting. It was an awkward fit at first, but over time, as it became clearer, it made a lot of sense because it connected with how we actually do our work. Plus it mimics how risk is defined and managed in most other industries.

How did it evolve into becoming something that’s embedded in everything that’s done for avalanche forecasting today?

Well, it’s kind of funny. That was never the plan, right? I did get the sense as it was coming together, “This is pretty good, I think people are going to like it.” I didn’t expect it would turn into what it is today. InfoEx has a lot to do with that. That embedded it in Canada. For sure the publication of our paper embedded it outside of just Canada. It’s not perfect, that’s for sure, but it’s a conceptual idea of how to look at avalanche danger and risk, and how to fit it all together. I would say the acceptance of it was quick, but at the same time it was a slow process over years.

We’re talking about almost 20 years ago when we started working on it. It wasn’t immediate and it was a lot of work to bring the industry onboard. In the end, I’ve always really believed the systems that are good are going to—they need to—stand on their own feet. You have to build it, and then you have to put it out there and watch what happens in the real world. You have to listen closely to the feedback and incorporate it. If it’s good, people are going to use it. If it’s not good, they aren’t going to use it. That was always my rationale. Let’s build something, put it out there, and watch what happens to it. And this thing got picked up.

I find it interesting the Conceptual Model started out of a project to revise the danger scale, but in the end, there is no direct link from the Conceptual Model hazard chart to the danger scale. Can you talk about why you didn’t include a link to the danger scale?

Good question. To be honest, I would say my Number 1 goal was to build an assessment method that linked to a danger rating. We were originally inspired by the Bavarian Matrix. It’s how the Europeans landed on their danger ratings. The first one I ever saw was in German, and they didn’t have an English version. Alan Jones got his mother to translate it for us.

That was our goal. In the end, we could have drawn the danger ratings into that hazard chart. In fact, we did do that (Fig. 4), but we decided to not just randomly draw lines and colours in there. We wanted to do it from data, and this is what didn’t work out in the end. The idea was, let’s collect data for a number of years as people use this thing, then let’s look for patterns, and we’ll be able to make the link to the danger rating. This was work that happened through Pascal Haegeli at Simon Fraser and his students. They did a bunch of data analysis to see if they could link the assessments to the danger ratings. That was our goal, but it didn’t work.

In retrospect, I do wish we had linked it. I think we should have drawn it in there. I can tell you roughly where the lines are: lower left corner is low danger; upper right corner is extreme danger, and then there’s some mix in between. It didn’t work out that way, unfortunately. It seems like low-hanging fruit to me.

What was the problem with collecting that data?

Well, we’re humans, not machines. I think this is maybe the biggest learning I’ve had out of the whole thing. Everything we read said that if you develop a consistent workflow and a structured method, it makes people more consistent: we will all follow the same path and land in the same place. In the beginning, we didn’t have that workflow. You would discuss the snowpack structure and avalanche observations, and then you would pick a stability rating—you’d make one choice.

With the CMAH, there were now five to 10 choices to make. You’ve got to pick the avalanche problem type and the sensitivity, then you pick the spatial distribution, the likelihood and the size, then you choose the danger level. What that did, in addition to bringing us all onto the same page, was reveal our inconsistencies and individual tendencies as we debated each component. This is human nature and the CMAH was our tool that revealed it.

When Pascal and his students tried to put the data together and see what patterns led to what danger ratings, the data was quite scattered. There weren’t discernable patterns that could be linked to a danger rating.
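To make the data problem described above more concrete, here is a minimal Python sketch of how one structured assessment might be recorded and how ratings could be compared within a single cell of the likelihood-and-size hazard chart. It is not the InfoEx schema or the analysis done at Simon Fraser; the class name, field names, function names, and all sample values are hypothetical and chosen only for illustration.

```python
from collections import Counter
from dataclasses import dataclass


@dataclass(frozen=True)
class HazardAssessment:
    """One forecaster's choices for one avalanche problem (hypothetical layout)."""
    problem_type: str          # e.g. "wind slab"
    sensitivity: str           # illustrative label
    spatial_distribution: str  # illustrative label
    likelihood: str            # e.g. "possible", "likely"
    size: int                  # avalanche size, e.g. 1 for a Size 1
    danger_rating: str         # rating the forecaster finally chose


def ratings_by_chart_cell(assessments):
    """Count the danger ratings chosen for each (likelihood, size) cell."""
    cells = {}
    for a in assessments:
        cells.setdefault((a.likelihood, a.size), Counter())[a.danger_rating] += 1
    return cells


def agreement(rating_counts):
    """Share of assessments in a cell that picked the most common rating."""
    total = sum(rating_counts.values())
    return rating_counts.most_common(1)[0][1] / total


# Made-up sample: two forecasters agree on likelihood and size but not on danger.
sample = [
    HazardAssessment("wind slab", "reactive", "specific", "likely", 2, "considerable"),
    HazardAssessment("wind slab", "reactive", "specific", "likely", 2, "high"),
    HazardAssessment("wind slab", "stubborn", "isolated", "possible", 1, "low"),
]

cells = ratings_by_chart_cell(sample)
for cell, counts in cells.items():
    print(cell, dict(counts), agreement(counts))
```

When the agreement value within a cell is low, the same likelihood and size led different people to different danger ratings, which is the kind of scatter that makes it hard to draw rating boundaries onto the chart from data.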

How about yourself? You use the Conceptual Model when you forecast. How do you feel when you sit down and use it? What are the things you like about it and what are the things that you wish you could do differently?

I’m obviously a little bit biased, but it’s got us all to understand the structure of avalanche hazard. I’ve been watching how similar work is evolving in Europe and they use a different method, but it’s the same thing. They’re landing on a likelihood and size framework to get to avalanche danger. We’re all going in the right direction. Probably the thing that drives me the craziest is meetings where we’re talking endlessly about the location of a Size 1 wind slab. Is it a wind slab? Is it a storm slab? Is it even really a problem? I understand these are real questions, but sometimes I think we get caught up in stuff that doesn’t really matter. We seem to get bogged down easily in details that often don’t matter.

Sometimes people forget it’s a conceptual model. It’s not a physical model and it’s not a statistical model. It’s just a way of thinking about avalanche danger. One avalanche problem is going to overlap another, and there’s just not a clean line drawn down the middle. I think that’s hard for some people who are looking for black and white. This thing’s full of uncertainty, just like avalanches.

Of course, there’s little things I’d love to change. Overall, my biggest thing I wish we could do differently is accept the fact it’s conceptual and not get hung up on what seem to be details that might not be as relevant to what we’re doing that day.

You pointed out in your published paper that clear definitions of avalanche problems, or of when to transition from one problem to another, still need to be determined. There’s definitely quite a bit of research on that. Another thing that’s come up is the clear definition of what ‘possible’ means, and what ‘likely’ means—defining those things. Do you ever wish there were clear definitions of those, or were you happy to say we need to study this more before we can specifically define all the terms used?

I wish we had better definitions for many parts of the CMAH, or I wish it could be more cut and dried. But like I say, it’s conceptual. I knew it was not going to be perfect even when we made it. This was just a step. If we can establish the basic framework of avalanche forecasting, then in the future, people will make it better. There will be more research, people will tighten this model up, it will evolve. I really had no sense this was the end. It is actually just another step along the way, and it’s going to get better.

In Europe, they have, what, five avalanche problems?

Correct. The European avalanche problems were developed for public warnings and ours were not. We did eventually want the danger scale to be the public product, but we were building something for forecasters to assess the hazard, so it was intended to be technical and as precise as we could make it.

Likelihood’s tough, too. Likelihood, as it is now, I consider it to be too top heavy. There’s too much likely, very likely, certain, almost certain. I wish we could readjust that one a little bit. I mean, the whole thing could use some work. There are always little things to fix up here and there.

If there is a CMAH 2.0, what would be the things you would want to see in it?

I would want to improve the likelihood assessment; I think it’s a Holy Grail, but perhaps elusive. I’ve been working on that with Scott Thumlert (and Bruce Jamieson) for the last bunch of years. But likelihood is a really complicated problem and I’m not sure we’re ever going to find a good solution for it inside the Conceptual Model. The move to put numerical values of probability on our hazard assessments is really hard because of scale issues and lack of good data. With every proposal on this, I find myself wondering how I could ever make that assessment? Numbers seem great on the surface, but you need to ask yourself how you’ll make the determination, and based on what? It has to work and be practical.

I’m watching some work in Europe right now, which I think is really going down the right road in terms of assessing likelihood across regions, which will ultimately have to come from snowpack modeling.

There’s work to do on the avalanche problems. I think we could have sub-categories for public forecasting so we don’t end up in this deep persistent/persistent dilemma that seems to be common. Persistent slab as a single category is much easier for the public to grasp.

I’ve got a long list of little things, nothing major though. I don’t know if I’m going to step into a Conceptual Model V2. It might be time to let somebody else do that, but I think it’s a really good question. I mean, these things have to evolve. Because I was the lead author, I often get asked, “Are you going to be updating it?” The answer at this point is, “No.” Not because I don’t think it needs it, but because I haven’t had the time and I’m not sure I’m the best one to lead a revision. I’ve had my say already, but I would like to contribute my experience so the same mistakes aren’t repeated.

I was really happy to see the inclusion of avalanche problems in OGRS this year. In the absence of this model living somewhere, it’s hard to update it unless you write a new paper. You could write a new paper and embed it into CAA training, but you need some pretty broad consensus to do that. Who’s going to do an update and who will manage it? I don’t know who would update it and where it would live. It needs it, but I don’t really know the structure of how to achieve this.

Those are my questions. Is there anything you think we missed talking about?

I was going to tell you the story about 2007. One of the questions you asked me before was did we get any pushback? There was lots. That’s part of it. If you can’t stand the pushback and defend your work with good logic and evidence, then it doesn’t deserve to stand on its own. In 2007, Clair Israelson convened this meeting he called the Senators Meeting. He gave me the floor for a whole day to present this model. It was totally intimidating. I was 38, everybody else is 50–60, my mentors. There I am, describing this completely different way of doing things. I remember at the end, the Chair of the CAA Technical committee tried to put people at ease saying, “Don’t worry, we’re not going to do this.”

I was pretty choked. I had just spent all day working through concepts and new ideas with people. It was just one of those things that at the time, it was such a different way of looking at things and people were nervous. He shook his head at me in disbelief after the meeting and said, “I can’t believe you’re doing this, really trying to change everything.”

We spoke later and he apologized for the shutdown. It’s hard to push new stuff through. You have to be able to back it up and you have to be prepared for people to roar at you. I had to learn to write science papers, for crying out loud. I’m a mountain guide, that’s not my background, but I had to learn to do it to push ideas through. Pushback is part of it and defending your work and making it stand on its own is pretty important.

Obviously you did a good job of that. Here we are today talking about it years later.

No kidding. Crazy.

REFERENCES

The following are the three papers that were written as part of this work:

Risk Framework and Definitions

Statham, G.: Avalanche Hazard, Danger and Risk—A Practical Explanation, in: Proceedings International Snow Science Workshop, Whistler, Canada, 21-27 September 2008, 224-227, arc.lib.montana.edu/snow-science/item/34, 2008.

Avalanche Hazard Assessment

Statham, G., Haegeli, P., Greene, E., Birkeland, K., Israelson, C., Tremper, B., Stethem, C., McMahon, B., White, B. and Kelly, J.: A conceptual model of avalanche hazard, Nat Hazards, 90, 663-691, doi.org/10.1007/s11069-017-3070-5, 2018.

Public Avalanche Danger Scale

Statham, G., Haegeli, P., Birkeland, K., Greene, E., Israelson, C., Tremper, B., Stethem, C., McMahon, B., White, B. and Kelly, J.: The North American Avalanche Danger Scale, in: Proceedings International Snow Science Workshop, Lake Tahoe, USA, 17-22 October 2010, 117-123, arc.lib.montana.edu/snow-science/item.php?id=353, 2010.